Policy Gradient Methods: Basics and Modern Extensions (Proseminar)

Organizers

Co-organizers

Jasper Hoffmann and Yannick Vogt

Description


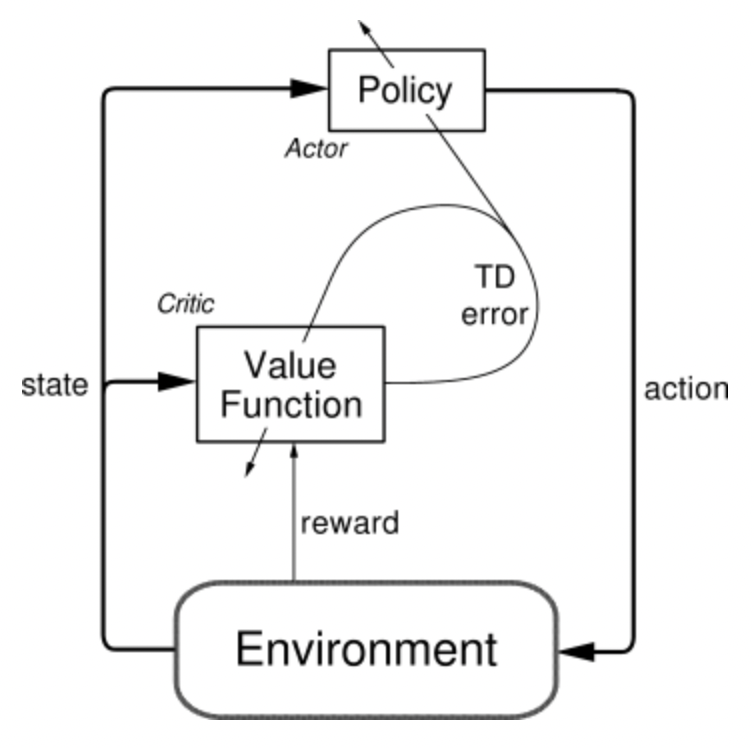

In this Proseminar, we will give a general introduction to Reinforcement Learning (RL) and Policy Gradient methods. We will first cover the classic REINFORCE and Actor-Critic algorithms. Building on these, the participants will then present modern extensions of the two algorithms (see the topics list below). Additionally, we will provide code so that participants can try out the algorithms on a simple example. Finally, we will introduce general aspects of scientific presentations and academic honesty.


Course information

Details:

Course number: 11LE13S-510-27

Places: 8

Lectures will be held in a hybrid format. The in-person meetings take place in meeting room 42 on the 1st floor of the new IMBIT building (Georges-Köhler-Allee 201), right next to the kitchen area. If you cannot attend in person, you can join any meeting via Zoom:

https://uni-freiburg.zoom.us/j/63820890181?pwd=UEdnR2xnUFkzSzZXOEY1K1ZxbWlLQT09

Meeting ID: 638 2089 0181

Course Schedule:

- Introduction: Thursday, April 20th, 16:00 c.t.

- Lecture on how to present: Thursday, May 25th, 14:00 (Updated!)

- RL Introduction: We will provide you with relevant reading material and lecture recordings, which you will discuss with your supervisors in two Q&A sessions. (Updated!)

- Poster Session: Thursday, July 20th

- Deadline for Poster Print: Monday, July 17th at midnight. If you miss this deadline, you will need to print the poster on your own.

- Deadline for Report: Friday, September 1st

Requirements:

No prior knowledge of reinforcement learning is necessary. We will introduce the basics to get you up to speed so that you will be able to understand the assigned papers (with the help of your supervisor).

Selection Procedure:

Candidates are prioritised if they attend the introduction event or send a motivational email to both co-supervisors by Monday, April 24th, 12am (updated!). We will also provide a recording of the introduction. Once you have been confirmed, you bid on a topic by ranking all of the topics listed below by Friday, May 26th.

Resources:

Poster guideline as pptx or pdf

Topics

- A Minimalist Approach to Offline Reinforcement Learning (Offline RL, TD3)

- Asynchronous Methods for Deep Reinforcement Learning (A2C, A3C)

- Deep Reinforcement Learning that Matters (Reproducibility)

- Continuous Control With Deep Reinforcement Learning (DDPG)

- Addressing Function Approximation Error in Actor-Critic Methods (TD3)

- Uncertainty-driven Imagination for Continuous Deep Reinforcement Learning (Model-based RL, TD3)

- Proximal Policy Optimization Algorithms (PPO)

- Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor (SAC)